Apple is reportedly giving Siri a large language model (LLM) upgrade in a year or two. The boost is expected to make the iPhone’s virtual assistant more conversational and equip it with a wider range of world knowledge. What most users don’t know is that the iOS 26 beta already includes a hidden AI chatbot that testers can try right away.

For reasons I’ll clarify later, iOS 26 neither offers a dedicated app for Apple’s LLM chatbot nor bakes it into the standard Siri experience. I came across the obscure interface while exploring Apple’s updated Shortcuts app. To try it, you build your own shortcut on the iOS 26 developer beta.

Before we get started …

Before diving into Apple’s AI chatbot and its capabilities, there are a few things to keep in mind:

- When building the chatbot via Shortcuts, you can choose between Apple’s on-device model, Private Cloud Compute, and OpenAI’s ChatGPT (a GPT-4 variant with real-time results).

- The on-device and Private Cloud Compute models have a knowledge cutoff date of October 2023, so neither has access to live web results or recently updated information.

- Apple’s models claim to understand English, Spanish, French, German, Chinese (Mandarin), Japanese, Korean, Italian, Portuguese, Russian, Arabic, Hindi, Dutch, Turkish, and Malay, but they seem unreliable in several of these languages.

- The chatbot avoids discussing illegal activities, hate speech, violence, self-harm, sexual content, personally identifiable information, illegal drug use, and political extremism.

- I tested the chatbot for about a week on an iPhone 16 Pro Max running iOS 26 developer beta 1.

- The features I’m breaking down are generally available on any Apple Intelligence-enabled iPhone, iPad, or Mac running OS version 26.

Setting up the chatbot

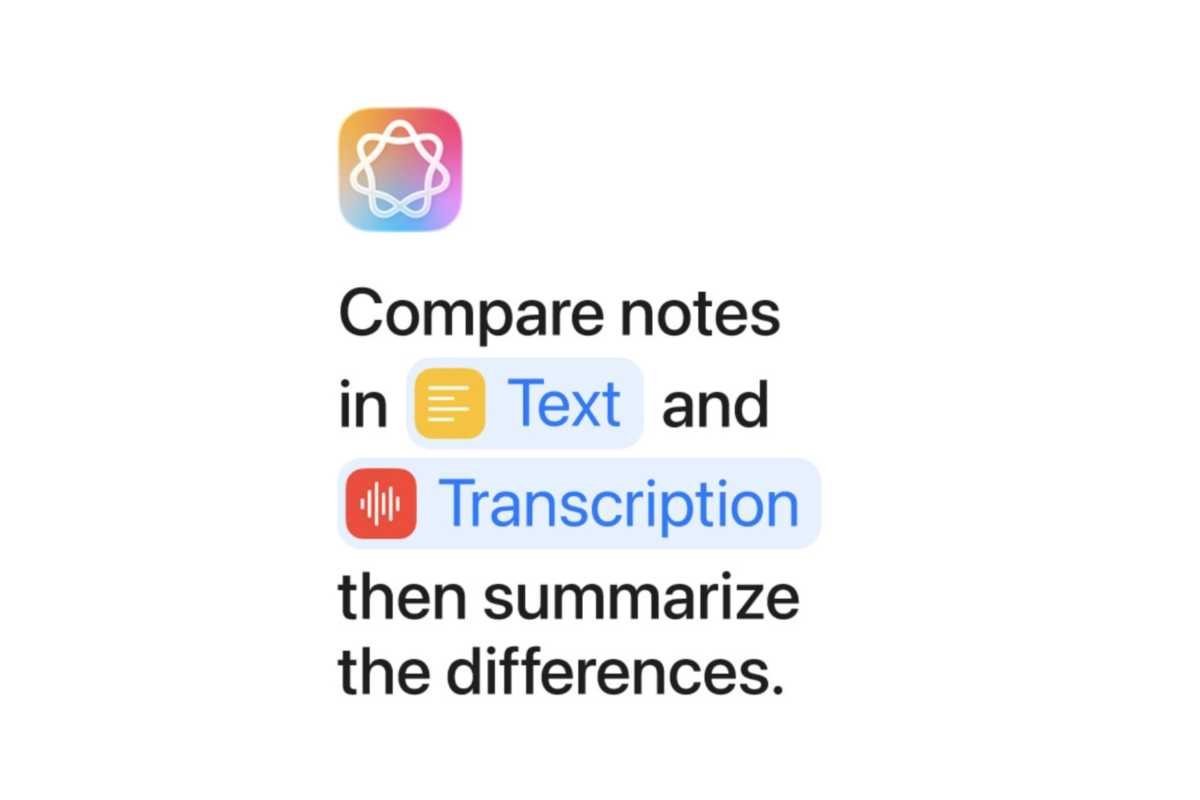

As with any shortcut, there’s no single way to build the AI chatbot. You can get creative and customize it so it works the way you expect. The primary action you need to incorporate is the new Use Model action under the Apple Intelligence menu in the shortcut creation flow, which is apparently only compatible with text input and output.

When choosing the model, I advise you to select the on-device option. Choosing ChatGPT makes no sense, as OpenAI already offers native and web chatbots that work more reliably than a shortcut. Similarly, beyond privacy, I see no reason to use Apple’s Private Cloud Compute, as online services like ChatGPT and Google Gemini are miles ahead.

The main advantage of using Apple’s on-device chatbot is that it works offline and requires no additional downloads (provided you’re already using Apple Intelligence). If you’re connected to the internet, you’re better off using one of the reputable third-party online chatbots for your daily queries.

If you prefer a voice-based approach, you can add an action that converts your speech to text and then feeds the resulting text to the model. You can also add the text-to-speech action to read the chatbot’s text response aloud.

My ideal setup has the shortcut present a text field. Once I type my query, a dedicated action explicitly asks the LLM to be brief before feeding it my text, to avoid unnecessarily long answers. I’ve also enabled the Use Model action’s Follow Up option, as it lets me ask further questions while maintaining context and chat history during a single session.

To replicate my setup, follow these steps:

- Launch the Shortcuts app on the iOS 26 beta.

- Tap the plus (+) button in the top right corner to create a new shortcut.

- Search for and add the Text action.

- Tap Text in the Text action and choose Ask Each Time.

- Search for and add the Use Model action. Select the On-Device option.

- Tap Request in the Use Model action, type “Briefly address the following request:”, then add the Text variable from the autocomplete row just above the keyboard.

- Tap the right arrow (>) on the Use Model action and enable the Follow Up toggle.

- Exit the shortcut to save it.
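
For readers who think better in code, the logic of the shortcut can be sketched roughly as follows. This is only an illustration of the flow (prefix the query with a brevity instruction, send it to a model, keep the history for follow-ups), not how Shortcuts is implemented. Every name here is hypothetical, including `BRIEF_PREFIX`, `ChatSession`, and the `echo_model` stand-in, which simply reports how many turns it has seen instead of calling Apple’s model.

```python
from typing import Callable, List

# Hypothetical brevity instruction, standing in for the text typed into Request.
BRIEF_PREFIX = "Answer the following request briefly: "


def build_prompt(user_text: str) -> str:
    """Prepend the brevity instruction to the user's query."""
    return BRIEF_PREFIX + user_text.strip()


class ChatSession:
    """Keeps the running history so follow-up questions retain context."""

    def __init__(self, model: Callable[[List[str]], str]) -> None:
        self.model = model
        self.history: List[str] = []

    def ask(self, user_text: str) -> str:
        # Record the prompt, let the model see the whole history, record the reply.
        self.history.append(build_prompt(user_text))
        reply = self.model(self.history)
        self.history.append(reply)
        return reply


def echo_model(history: List[str]) -> str:
    """Stand-in for the on-device model (an assumption, not Apple's API)."""
    return f"(reply to turn {len(history)})"


session = ChatSession(echo_model)
first = session.ask("How long should I boil an egg?")
second = session.ask("And a soft-boiled one?")
```

The second call sees the first exchange in `history`, which is the role the Follow Up toggle plays in the shortcut.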

Once your shortcut is ready, you can trigger it in several ways, including a custom voice command, Double Back Tap, Spotlight Search, the Action button, etc. If you’ve enabled iCloud sync, you can use the same shortcut on all your compatible iPhones, iPads, and Macs.

Putting it to the test

To find out how reliable Apple’s AI chatbot is, I asked it one of humanity’s most perplexing questions: How many Rs are there in the word strawberry? The chatbot, using the on-device LLM, correctly answered three every single time. Oddly enough, when I chose the supposedly superior Private Cloud Compute option, it falsely and stubbornly claimed that there are only two Rs. The real tests followed, all using the on-device model.
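
For reference, the ground truth for the strawberry question is trivial to check deterministically. A minimal sketch (the variable names are mine):

```python
word = "strawberry"

# Count occurrences of the letter "r", ignoring case.
r_count = word.lower().count("r")

# 1-based positions of each "r", to show where they sit in the word.
r_positions = [i + 1 for i, ch in enumerate(word.lower()) if ch == "r"]

print(r_count, r_positions)
```

The count is three, at the third, eighth, and ninth letters, which matches the on-device model’s answer.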

I asked the offline chatbot questions about cooking, like how long to boil an egg or cook ground meat in a pressure cooker. The results were mostly accurate and informative. It can also provide ingredient lists and instructions for famous recipes, but I wouldn’t necessarily trust it if I had guests over. When asked whether pineapple belongs on pizza, it refused to state the only correct answer and insisted it’s a matter of taste, presumably to avoid offending certain users. Disappointing.

Going further, I fed the chatbot basic math equations, and it solved them all correctly. It’s also aware of and follows the PEMDAS rule, so you don’t have to insert parentheses to make it multiply before adding.
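
As a quick illustration of what following PEMDAS means here, Python applies the same precedence rules, so the expressions below behave the way the chatbot’s answers did. The specific numbers are my own example, not one of the equations I gave the chatbot.

```python
# Multiplication binds tighter than addition, so no parentheses are needed.
without_parens = 2 + 3 * 4

# Explicit parentheses around the product give the same result.
with_parens = 2 + (3 * 4)

# Parentheses around the sum force the addition to happen first.
forced_addition = (2 + 3) * 4

print(without_parens, with_parens, forced_addition)
```

The first two evaluate to 14, while forcing the addition first yields 20.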

When asked to compare the feature sets offered by WhatsApp and Telegram, it provided a well-formatted list breaking down the main features. However, most of the (confidently) provided information was incorrect. For some reason, the chatbot also sometimes randomly responded in German, even though my queries were explicitly sent in American English.

Speaking of languages, while the chatbot claims to support Arabic and Turkish, it couldn’t hold meaningful conversations in these languages. It gets some things right, but most of its answers include irrelevant words or phrases. I don’t speak the rest of the supported languages to test how well it knows them, but I assume it’s only proficient in English.

Then I moved on to religious questions, which it didn’t always get right either. For example, I asked it about the differences and overlap between kosher and halal food according to Jewish and Islamic teachings, and its response was inaccurate. It’s aware of these dietary laws in concept, but it can’t properly compare them or explain their guidelines.

When asked to come up with an original quote, it said the following: “In the quiet dance between the echoes of our past and the whisper of our potential future, we find the deep truth that every moment is both a reflection of who we have become and a canvas that we paint the essence of our being.” Quite touching, if you ask me.

To test its reasoning capabilities, I asked it when we can expect iOS 26, given that iOS 18 launched in 2024. Given its knowledge cutoff date of October 2023, the reasonable response would have been 2032 (eight years after 2024). Instead, it replied: “If iOS 18 is released in 2024, we can deduce that Apple typically releases new iOS versions annually. Therefore, iOS 26 would logically launch in 2025, provided the same release pattern continues.” Funnily enough, it got the answer right, not because it can predict the future, but because its reasoning skills are poor. For what it’s worth, it also thinks that iOS 27 will launch in 2025 for the same reason.
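
The arithmetic behind the “reasonable” answer can be spelled out as a small sketch. It assumes what a model with an October 2023 cutoff would assume: one major iOS version per year, numbered sequentially. The function name and defaults are mine.

```python
def expected_release_year(target_version: int,
                          known_version: int = 18,
                          known_year: int = 2024) -> int:
    """Extrapolate a release year assuming one sequentially numbered version per year."""
    return known_year + (target_version - known_version)


ios_26_year = expected_release_year(26)  # eight versions after iOS 18
ios_27_year = expected_release_year(27)  # nine versions after iOS 18

print(ios_26_year, ios_27_year)
```

Under that assumption, iOS 26 lands in 2032 and iOS 27 in 2033, so a chatbot that answers 2025 for both is not extrapolating at all.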

I continued testing its knowledge across a wide range of topics. For example, it can list the symptoms of common health conditions, but you certainly shouldn’t rely on it (or any AI chatbot, really) for medical advice. Surprisingly, it was also able to correctly tell me which metro line to take to get from (popular) point A to point B in Istanbul, specific stations and all. On the other hand, it failed to provide basic Apple technical support, such as how to hide a photo on iOS. Other fails include falsely claiming that Americans can’t get a visa on arrival in Lebanon and that Mexican citizens don’t need a visa to enter the United States legally.

Why Apple’s AI chatbot is hidden

Apple’s LLM in the Shortcuts app is no ChatGPT, but it’s not entirely useless either. It apparently uses the same model that powers Writing Tools and the summarization features across iOS. If you feed it a large wall of text and ask it to paraphrase or rewrite it, it will do so reliably. But why would you, when the native Writing Tools have a superior UI/UX?

As for why it’s hidden: mainly because Apple’s LLM-powered chatbot, as shown above, is prone to hallucinations and often provides confidently wrong answers. Of course, it answers many questions correctly, but it maintains the same confident tone when providing incorrect information. So you can’t really tell unless you already know the answer, which defeats the point of asking. In Apple’s defense, every response notes that you need to check it for errors.

That will likely change as iOS 26 arrives and matures, and certainly as Apple Intelligence’s Siri capabilities develop. Apple gave no indication during WWDC that it’s offering a built-in chatbot as part of iOS 26’s Apple Intelligence features, so it probably won’t rise to the level of ChatGPT or Gemini just yet. But you can try it if you’d like, and it’s as close to a demo as we’ll get.